100% Tested is not 100% Covered

A misconception in testing that we frequently see is the view that if all the tests have been run, then the system is fully tested. This leads to the risk of false positives, where stakeholders see a 100% tested report and think everything is safe and ready to ship.

But what if that testing was just a drop in the ocean?

Issues with scope

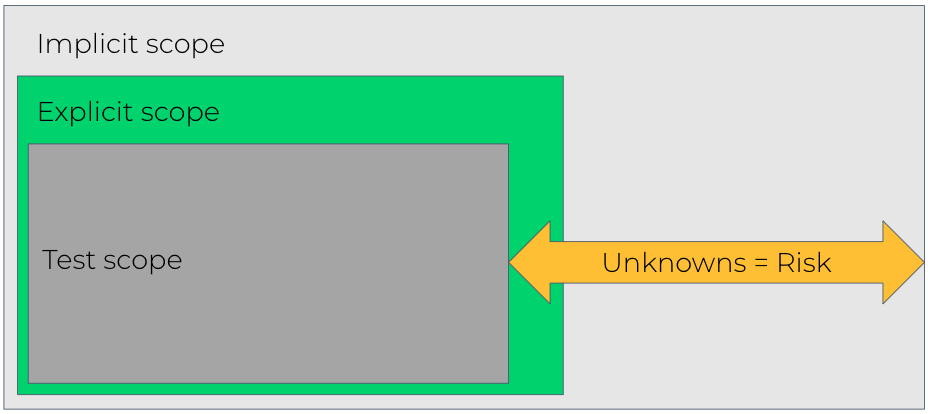

In most project methodologies there’s only a limited time for documenting the scope of what we want, whether that’s user stories, requirements, specifications, designs or anything else. This means that baked into our project approaches is the idea that some of the project’s scope will simply go undocumented.

- Undocumented explicit scope, things we probably know we want but forget to document (or don’t have time to document). Usually these are things like branding or non-functional requirements.

- Implicit scope, things the business knows it wants but doesn’t document because it assumes they’re obvious and everyone already knows about them. This might be domain knowledge or knowledge from existing projects.

- Total unknown scope, things the project team just doesn’t know about, because they’ve never come up or because we lack the knowledge in that area to recognise them.

Where scope is undocumented (for whatever reason), even when testing covers 100% of the documented asks, it’s not covering 100% of what’s really needed.

Issues with testing scope

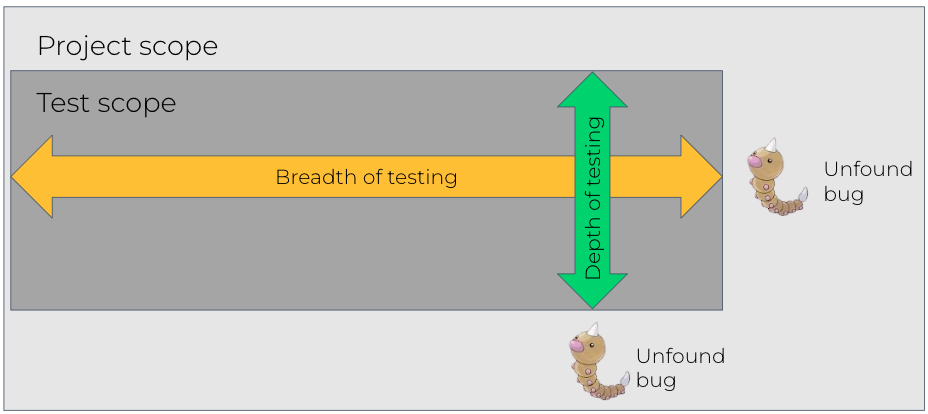

Testing can be infinite, and writing tests to cover everything would take forever. Think about a field that accepts 20 alphanumeric characters. Each of the 20 positions can hold any of 62 characters (a-z, A-Z and 0-9), so there are 62^20 (roughly 7 × 10^35) possible 20-character values, and far more once we add shorter inputs, spaces, special characters and negative cases. That’s far too many tests for one field. It’s unrealistic to try to document 100% of scope for all the testing we might do, which is where risk-based testing comes in.
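To show why the count grows so quickly, here’s a small Python sketch. It assumes the field accepts only the 62 ASCII letters and digits; the names `ALPHABET`, `exact_length_count` and `up_to_length_count` are illustrative, not taken from any tool. Multiplying characters by positions (62 × 20 = 1240) badly underestimates the space. Because every position varies independently, the count is the alphabet size raised to the power of the length.

```python
# A minimal sketch: count the possible values of a text field, assuming the
# alphabet is exactly the 62 ASCII alphanumerics (a-z, A-Z, 0-9).
import string

ALPHABET = string.ascii_letters + string.digits  # 52 letters + 10 digits = 62
MAX_LENGTH = 20


def exact_length_count(alphabet_size: int, length: int) -> int:
    """Number of values with exactly `length` characters."""
    # Each position is chosen independently, so the choices multiply.
    return alphabet_size ** length


def up_to_length_count(alphabet_size: int, max_length: int) -> int:
    """Number of values with 1..max_length characters (empty input excluded)."""
    return sum(alphabet_size ** k for k in range(1, max_length + 1))


if __name__ == "__main__":
    n = len(ALPHABET)
    print(n)  # 62
    print(f"exactly {MAX_LENGTH} chars: {exact_length_count(n, MAX_LENGTH):.3e}")
    print(f"1 to {MAX_LENGTH} chars: {up_to_length_count(n, MAX_LENGTH):.3e}")
    # The naive "characters x positions" estimate, for comparison:
    print(n * MAX_LENGTH)  # 1240
```

Even before special characters or negative cases, the exact-length count alone is around 7 × 10^35, which is why exhaustive testing of a single field isn’t an option.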

But this means that even when we cover 100% of our planned tests, we know we’re not covering 100% of what the product / feature / software can do. We’re assuming that, where we’ve seen something similar working, other things will work too.
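Risk-based testing is often done by scoring each candidate test on how likely that area is to fail and how bad a failure would be, then running the highest-scoring tests first. The Python sketch below assumes a 1-to-5 scale for each score and risk = likelihood × impact, which is a common convention rather than a fixed standard. `CandidateTest`, `select_by_risk` and the example tests are hypothetical.

```python
# A minimal sketch of risk-based test selection. Assumptions (hypothetical,
# not from any particular tool): each candidate test has a likelihood and an
# impact score from 1 to 5, and there is only time to run `budget` tests.
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    likelihood: int  # 1 (unlikely to fail) .. 5 (very likely to fail)
    impact: int      # 1 (minor) .. 5 (severe if it fails in production)

    @property
    def risk(self) -> int:
        # Assumed scoring convention: risk = likelihood x impact.
        return self.likelihood * self.impact


def select_by_risk(
    candidates: list[CandidateTest], budget: int
) -> tuple[list[CandidateTest], list[CandidateTest]]:
    """Split candidates into (planned, not_planned) by descending risk."""
    # sorted() is stable, so equal-risk tests keep their original order.
    ranked = sorted(candidates, key=lambda t: t.risk, reverse=True)
    return ranked[:budget], ranked[budget:]


if __name__ == "__main__":
    candidates = [
        CandidateTest("accepts 20 alphanumeric characters", 3, 4),
        CandidateTest("rejects 21 characters", 4, 3),
        CandidateTest("handles special characters", 4, 4),
        CandidateTest("handles leading/trailing spaces", 2, 2),
    ]
    planned, not_planned = select_by_risk(candidates, budget=2)
    print("Planned:", [t.name for t in planned])
    print("Not planned (accepted risk):", [t.name for t in not_planned])
```

Even if every planned test passes, the tests in `not_planned` have never been run: a “100% passed” figure only describes the planned subset.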

A drop in the ocean

So, testing scope might not cover everything we’ve asked for, and everything that’s been asked for might not cover everything we need. That means 100% of our tests won’t cover 100% of the project.

This leaves us with a gap in our understanding of quality, and these unknowns mean risk. Specifically, the risk that things won’t work the way we want them to.

Okay, great. So what can we do?

As with any risk, we have to mitigate it: here, the risk that 100% of our testing scope is taken to mean that 100% of everything in the product has been tested.

- Educating our teams, especially our stakeholders, so they know that even with 100% of planned testing complete there can still be areas left uncovered. That means accepting and understanding that there are still risks to quality after a 100% passed report.

- Sharing the scope of our testing, with our teams and stakeholders, so that they understand exactly what’s been covered and can spot any implicit or explicit scope that hasn’t been tested.

- Sharing narrative reports, not progress reports counting the number of tests passed. A narrative report detailing what’s been tested and observed will help your team understand the scope of testing and identify what’s missing.

- Being brave and candid, and not assuming you’re a bad tester because you can’t cover and know everything. Don’t hide from the team what we’ve been able to do, or the shortfalls in what we’ve tested. That transparency allows for better decision-making about quality.

- Asking your team and stakeholders to help, by being more explicit in their asks. Challenge and question your team early on to document implicit asks, and ask them to engage with the scope of your testing to make sure it covers everything we want covered.
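As one way to support the sharing and reporting points above, the Python sketch below keeps a simple scope register and reports known scope with no tests alongside the pass rate, so a “2/2 passed” result isn’t read as full coverage. The categories follow the kinds of scope described earlier. Only scope that has been surfaced (for example through the conversations and challenges above) can appear in the register. `ScopeItem`, `summarise` and the example items are hypothetical.

```python
# A minimal sketch of a scope-aware test summary. The scope items, their
# categories and the test results below are hypothetical examples.
from dataclasses import dataclass, field


@dataclass
class ScopeItem:
    description: str
    source: str  # e.g. "documented", "undocumented explicit", "implicit"
    tests: list[str] = field(default_factory=list)  # planned tests covering it


def summarise(scope: list[ScopeItem], results: dict[str, bool]) -> str:
    """Report the pass rate and the known scope that has no tests at all."""
    planned = [t for item in scope for t in item.tests]
    passed = sum(1 for t in planned if results.get(t, False))
    untested = [item for item in scope if not item.tests]
    lines = [
        f"Planned tests passed: {passed}/{len(planned)}",
        f"Known scope items with at least one test: "
        f"{len(scope) - len(untested)}/{len(scope)}",
    ]
    for item in untested:
        lines.append(f"  Not tested ({item.source}): {item.description}")
    # Unknown scope can't be listed, so say so explicitly in the report.
    lines.append("Unknown scope is, by definition, not listed here.")
    return "\n".join(lines)


if __name__ == "__main__":
    scope = [
        ScopeItem("Username accepts up to 20 alphanumeric characters",
                  "documented", ["username_max_length", "username_alphanumeric"]),
        ScopeItem("Pages follow the brand guidelines", "undocumented explicit"),
        ScopeItem("Existing accounts keep working after the change", "implicit"),
    ]
    results = {"username_max_length": True, "username_alphanumeric": True}
    print(summarise(scope, results))
```

Here every planned test passes, yet only one of the three known scope items has any testing, which is exactly the gap a bare pass-rate report hides.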

That last part can be hard, especially in organisational cultures that are more focused on timelines than engineering outcomes. In those situations I’ve found that running a workshop early on to go over coverage, and aiming for “stakeholder sign-off of testing”, can help. It also helps to raise formal risks about test coverage, and about how perceptions of test scope are being used in decision-making.

Help your team to make meaningful decisions about quality. Help them to understand that even when your testing is complete, it’s not really complete (or finished).
