You don’t need so many E2E tests (Or do you?)

We’ve all seen the testing pyramid, a diagram showing that, proportionally, we should have fewer of our larger tests in favour of smaller ones. Yet so many teams still rely primarily on larger end to end tests. Why is that? More importantly, is that a problem?

End to end tests, what are they?

End to end tests are the ones we use to check that the whole code base hangs together and works, literally from one end to the other. These are usually written as user journeys, following the actions that an end user might take through the application, exercising all the different bits of code along the way.

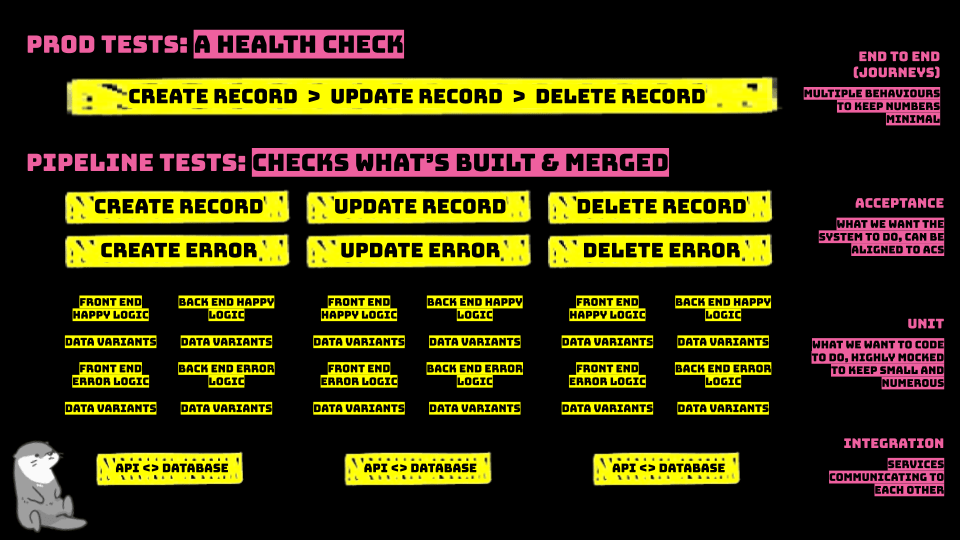

In a CI/CD pipeline, these tests aren’t really used to prove that what we’ve built is right (or good). Rather than being functional tests, they’re usually conceived as a health check for our staging and production environments. They’re an early warning for issues that might be seen across the application, and they also give us confidence that everything has been deployed into the environment.

The infographic above shows how end to end tests can be used to cover health checks, whilst functionality and code logic are validated by other, smaller tests. The question “are we building the right thing?” isn’t lost; it’s just answered elsewhere.
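As a rough illustration of a user journey used purely as a health check, here is a minimal Python sketch. The `Step` and `run_journey` names and the checkout journey are hypothetical; in a real suite each step would drive the deployed application through a browser automation tool rather than returning a stubbed `True`.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    """One step of a user journey; `action` returns True when the step succeeds."""
    name: str
    action: Callable[[], bool]


def run_journey(steps: List[Step]) -> Optional[str]:
    """Run the steps in order and stop at the first failure.

    Returns the name of the failing step, or None if the whole journey passed.
    An exception raised by a step is treated as that step failing.
    """
    for step in steps:
        try:
            passed = step.action()
        except Exception:
            passed = False
        if not passed:
            return step.name
    return None


# Hypothetical journey: each action is a stub standing in for real
# interactions with the deployed application.
checkout_journey = [
    Step("open home page", lambda: True),
    Step("log in", lambda: True),
    Step("add item to basket", lambda: True),
]

failed = run_journey(checkout_journey)
print("journey healthy" if failed is None else f"journey broken at: {failed}")
```

Stopping at the first failing step reflects what a journey-level check can tell you: where the journey broke, not which underlying service caused it.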

Why do teams create many end to end tests?

We’ve all seen the testing pyramid and have been told to minimise the number of end to end tests we create, but plenty of teams still write a lot of tests at this level. Here are a few reasons I’ve observed for why this might be the case:

Fallacy of first learned

For a lot of us in testing, end to end tests are our first exposure to automation, so they’re what we think of as “automated tests”. This can lead us to use them to meet more testing needs than we really should, such as functional testing and acceptance tests.

Even when we learn about and see other forms of testing, it’s natural for us to default to the first thing we learned. Our brains resist something new in favour of the comfort of what’s tried and tested in our past experience.

Ease of understanding

For most people, the journeys users take through the system are far easier to visualise than service interactions and code. A tester who isn’t familiar with engaging with code throughout the stack, or a product manager whose thinking is focused on customer journeys, will naturally think about verifying a feature at the user journey level.

Sometimes we think of automated testing as “just automate the manual testing that we did”. If we believe we need to replicate what we’d do in manual testing, it’s easy to see why we’d create end to end tests. This becomes especially common when different types of tester work together and the manual testers hand the automators a script of steps to turn into tests.

Ease of implementation

Okay, hot take time… not everybody who is responsible for automated tests can fluently read and write code like a software engineer. This is fine, but it creates a need for simpler (or low code) ways to implement automated tests. That’s where record-and-playback, AI-generated and low code test automation tools come into play! These tools help people who are not native coders create and maintain test suites and run automated tests.

Because these tools tend to be browser based and create end to end tests driven from the UI, we end up with many end to end tests covering all of the system’s behaviour.

Why we might not want many end to end tests

When we talk about reducing the number of end to end tests we have, there are usually specific reasons for doing so. Here are some of the situations in which we’d want to reduce end to end tests in favour of other types of test.

Because pipelines and faster delivery

If we’re working in a team that’s making lots of changes to code through a CI/CD pipeline, then we’ll want short feedback loops: the ability to reject code that doesn’t work, and lots of tests that run quickly to prove out behaviour. We can achieve that with:

- Smaller tests that use mocks to stand in for upstream and downstream dependencies, such as data setup.

- Tests that run in the pipeline, so they exercise fewer components than the full deployment.

- Tests that run earlier in a pipeline (or locally) rather than in staging or production.

- Tests that can run in parallel because they don’t hog an environment.
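To make the first and last points concrete, here is a minimal Python sketch of a small, mocked test using the standard library’s `unittest` and `unittest.mock`. The `order_total` function and the catalogue service it calls are hypothetical; the point is that a `Mock` stands in for the downstream service, so the test needs no deployed environment or seeded data and can run early in the pipeline, in parallel with other tests.

```python
import unittest
from unittest.mock import Mock


def order_total(items: dict[str, int], catalogue) -> float:
    """Sum price * quantity for each item in the order.

    `catalogue` is a hypothetical downstream service client with a
    `get_price(sku)` method; in production it would make a network call.
    """
    return sum(catalogue.get_price(sku) * qty for sku, qty in items.items())


class OrderTotalTest(unittest.TestCase):
    def test_total_uses_catalogue_prices(self):
        # The mock replaces the real catalogue service, so no environment,
        # test data or network access is needed.
        catalogue = Mock()
        catalogue.get_price.side_effect = {"apple": 0.5, "pear": 0.75}.get

        total = order_total({"apple": 4, "pear": 2}, catalogue)

        self.assertAlmostEqual(total, 3.5)
        catalogue.get_price.assert_any_call("apple")
```

If this test fails, the failure points at `order_total` itself rather than at a whole journey.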

This is why we tend to use code-based unit and acceptance tests to validate code logic and the behaviour of what we’ve developed. These are better suited to a pipeline, allowing us to validate code changes frequently and deploy many times.

Closer to the code is easier to debug

Teams with short SLAs for turning around fixes, a large codebase to maintain, or a lot of complexity will want to pinpoint issues quickly so they can fix them more easily. The closer to the code your tests are, the easier it is to diagnose issues using them.

If an end to end test breaks, you know that there’s a problem with a user journey, so the issue could be in any of the services used in that journey. With an acceptance test failure, you know which specific behaviour or feature is broken, and with a unit test failure you can start to pinpoint the exact logic, in a specific service, that isn’t working correctly.

That is… if you have the test coverage that supports that kind of debugging of course.

Ease of maintenance and supportability

It’s common knowledge that end to end tests are usually flakier than other types of test: they fail intermittently, which means you have to spend hours debugging them. Code-based tests are generally less flaky, which results in fewer false failures and fewer ignored tests. A few reasons for this:

- Mocked data inputs mean less reliance on data being in the right state.

- Testing smaller pieces means fewer things need to be ready for the test to work.

- More immediate responses from services mean fewer wait-time errors.

On top of this, smaller tests written in code tend to be more precise and focused, so it’s easier to work out what they should be doing and get them to do it. Fewer workarounds are needed to get them working, and each workaround is another thing that could cause a failure.

A note on the supportability of AI and low code end to end tests

I’ve found that heavily browser-based record-and-playback tests tend to be a real pain to get working, and then to fix when they stop working. Often a small change in page load timing will throw the test, or it will just fail intermittently, and it’s really hard to see why with the (lack of) tools available.
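One common cause of this kind of timing fragility is a test that waits a fixed amount of time for a page to load. The minimal Python sketch below uses a hypothetical `wait_until` helper to show the usual alternative: poll a condition until it holds or a timeout expires. Tools such as Selenium (with `WebDriverWait`) and Cypress (with its built-in retrying) provide their own versions of this, so treat it as an illustration of the idea rather than something to drop into a browser suite.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 10.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True as soon as the condition holds, or False if it never did.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Hypothetical stand-in for "the page has finished loading":
# it becomes true 0.3 seconds from now.
ready_at = time.monotonic() + 0.3


def page_ready() -> bool:
    return time.monotonic() >= ready_at


# A fixed sleep of 0.2 seconds followed by a single check would fail here;
# polling succeeds as soon as the page is ready.
print(wait_until(page_ready, timeout=2.0))
```

Polling adapts to faster or slower loads up to the timeout, whereas a fixed sleep is either too short (flaky) or too long (slow).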

When using AI to generate tests, there’s a risk that, because the tests aren’t written in a coding style you’re used to, they’ll be harder to change and maintain. The generated test code may also include inefficiencies that you’d need to refactor away, so make sure your team has the skills to do this.

Because we have it covered

With enough other test coverage, like unit tests, acceptance tests and post-deployment manual tests, alongside robust observability and/or user testing, your team may decide that the expense of end to end tests isn’t worth it.

Which is fine; nobody says that you have to solve the problem of health checks in production and post-deployment testing through end to end automation. There are many ways to skin a cat!

Pragmatism: When we might need more end to end tests

Lots of posts, blogs, conventional wisdom and people will point to the test automation pyramid and say with full confidence ALWAYS MAKE FEWER END TO END TESTS! Well, do you know what? Sometimes you can’t do that and you need more end to end tests, and I’m here to tell you when and why that is.

The code won’t take smaller tests

Some code isn’t written to be testable and so it just won’t take unit or acceptance tests. In these situations, unless you’re going to refactor everything, you may have to rely on using end to end tests to cover what you can as your primary testing method.

We work in a silo

Some organisations separate developers from automation testers, and the two groups aren’t encouraged to talk or collaborate. In these situations, the thinking about acceptance and functional testing may be thrown over the wall to the testers, and all you can do is try to implement end to end tests to cover it.

Or maybe the developers have a “testing isn’t my job” mentality and you can’t get them to add tests closer to the code. Then you’ll need to do what you can to add any sort of testing, which might end up being end to end tests.

We’re not coders

As I mentioned above, the people owning the automation testing might not be native coders and wouldn’t be best placed to create tests closer to the code. Then we might look at a low code, browser-based, record-and-playback solution, or even start writing end to end tests in Selenium or Cypress, because there are lots of guides for them and it’s easier for us to get started.

We’ve always done it that way

Some testers (and teams) have just always tested in a certain way, using end to end tests. They don’t want to learn other ways and they don’t want to advocate for change because it’s hard or they don’t see the value in it. Maybe they have a preferred test approach and/or tool that they always use because it gets results for them and feels comfortable… they’ll default to creating more end to end tests.

Management says so

Sometimes it’s just that your boss wants you to work in a certain way. Maybe it’s cheaper, maybe it’s easier for them to understand and manage, or maybe they’re a micromanager who doesn’t know any different. What are you gonna do?

This might be connected to BECAUSE THE AUDITORS SAY SO, where your organisation’s auditing body will only accept end to end test coverage as a way of proving the quality of your system.

In these situations we’ll likely have more end to end tests, and that’s fine: we’re doing what testing we can and not letting perfect be the enemy of good. Maybe it’s less efficient, but hopefully it gets the job done and gives us a better view of the quality of what we’re building.

So, yes, whilst it’s sometimes better to remove end to end tests and move down the testing pyramid, there are situations where it might be better (or more pragmatic) to let that model go and keep using end to end tests.